Challenges

The time-intensive nature of transcription has left many oral history collections undervalued in digital initiatives. Meeting accessibility standards involves not only transcribing recordings but also presenting them in an intuitive, navigable digital interface. OHD developer Devin Becker’s solution places the audio at the top of the page, followed by a visualization of the entire recording with color-coded tags, a key to the tags, a search bar for keyword queries, and the transcription below. This layout allows researchers to follow along with the timestamped transcript as the audio plays.

Despite advancements in the oral history player interface, the primary hurdle in developing these collections has been the initial transcription process. Since OHD’s development in 2016, machine learning speech recognition has improved considerably. Earlier options, however, were either free but so poor that they saved negligible effort over transcribing from scratch, or prohibitively expensive for a higher education institution. Fully human-driven transcription has its own challenges: it is tedious, slow-moving work that, without close supervision, can result in uncontrolled vocabulary, knowledge gaps, and biases from linear listening.

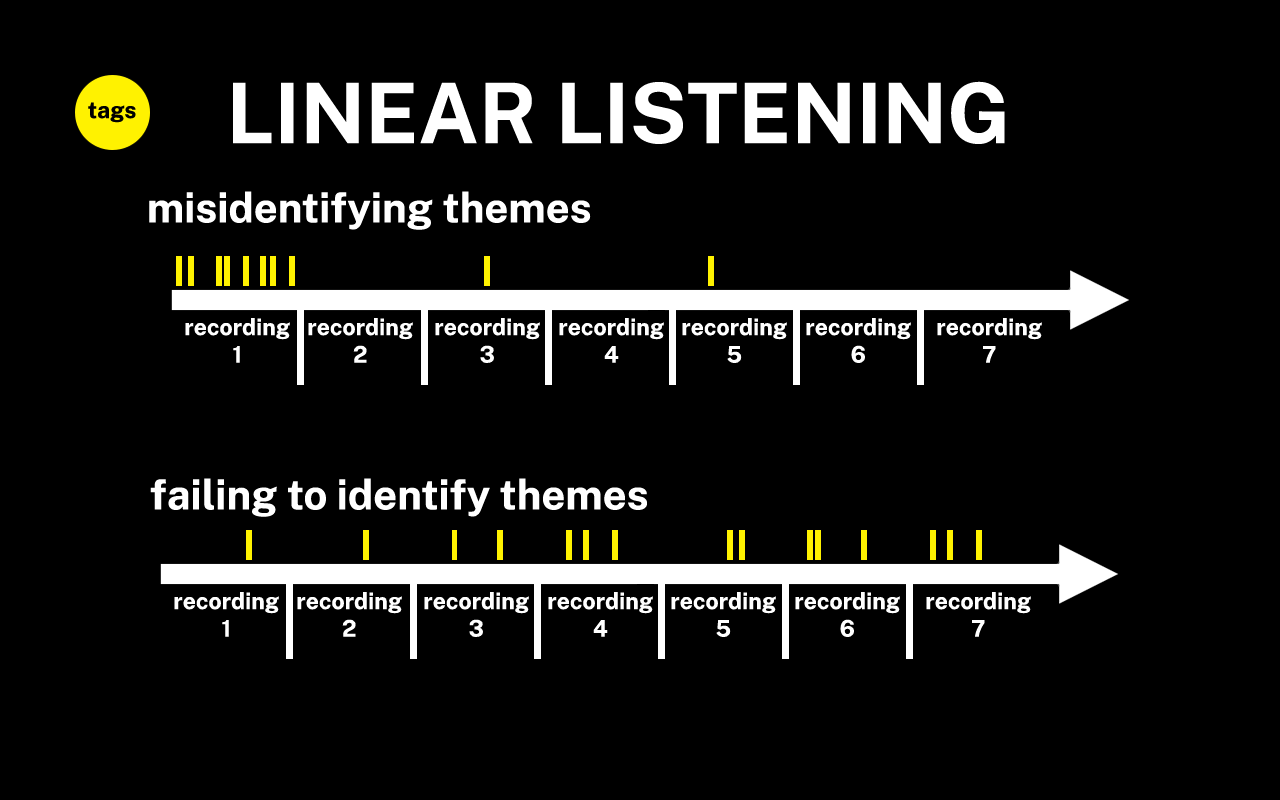

Linear listening, or creating tags by listening to an oral history collection from beginning to end, may mislead transcribers into establishing recurring themes that do not hold across the collection, or into missing themes that only begin to appear in later recordings. Distant listening, the approach that gives this paper its name, is an alternative: it text mines the combined transcripts and generates tags before the student worker begins the copy editing process. The goal is to produce richer, more accurate data, ultimately allowing researchers to identify more connections across entire oral history collections.
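
To make the idea of mining combined transcripts more concrete, here is a minimal sketch in Python. It is not the workflow used for OHD; it only assumes that a simple way to surface candidate tags is to count how many transcripts each term appears in and to keep the terms shared by several recordings. The function names, the stopword list, the `min_docs` threshold, and the sample transcripts are all hypothetical and invented for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real project would use a fuller one.
STOPWORDS = {"the", "and", "was", "that", "then", "for", "were", "our",
             "we", "my", "at", "of", "to", "in", "it", "on", "a", "i"}


def tokenize(text):
    """Lowercase the text and return its words, minus stopwords and very short words."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if w not in STOPWORDS and len(w) > 2]


def candidate_tags(transcripts, min_docs=2, top_n=10):
    """Return (term, transcript_count) pairs for terms found in at least min_docs transcripts."""
    doc_freq = Counter()
    for text in transcripts.values():
        # Use a set so each term counts once per transcript, not once per mention.
        doc_freq.update(set(tokenize(text)))
    shared = [(term, n) for term, n in doc_freq.items() if n >= min_docs]
    # Most widely shared terms first; ties broken alphabetically.
    shared.sort(key=lambda pair: (-pair[1], pair[0]))
    return shared[:top_n]


# Invented sample transcripts, for illustration only.
transcripts = {
    "interview_01": "We worked at the mill, and then the logging camp closed.",
    "interview_02": "My father ran the mill before the fire.",
    "interview_03": "The fire reached the logging camp that summer.",
}

tags = candidate_tags(transcripts)
for term, count in tags:
    print(f"{term}: appears in {count} transcripts")
```

Counting transcripts rather than raw mentions is the design choice that matters here: a term repeated many times in one interview does not outrank a term that recurs across the collection, which is the cross-collection view that linear listening tends to miss. The output is only a list of candidates for a person to review, not a finished controlled vocabulary.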